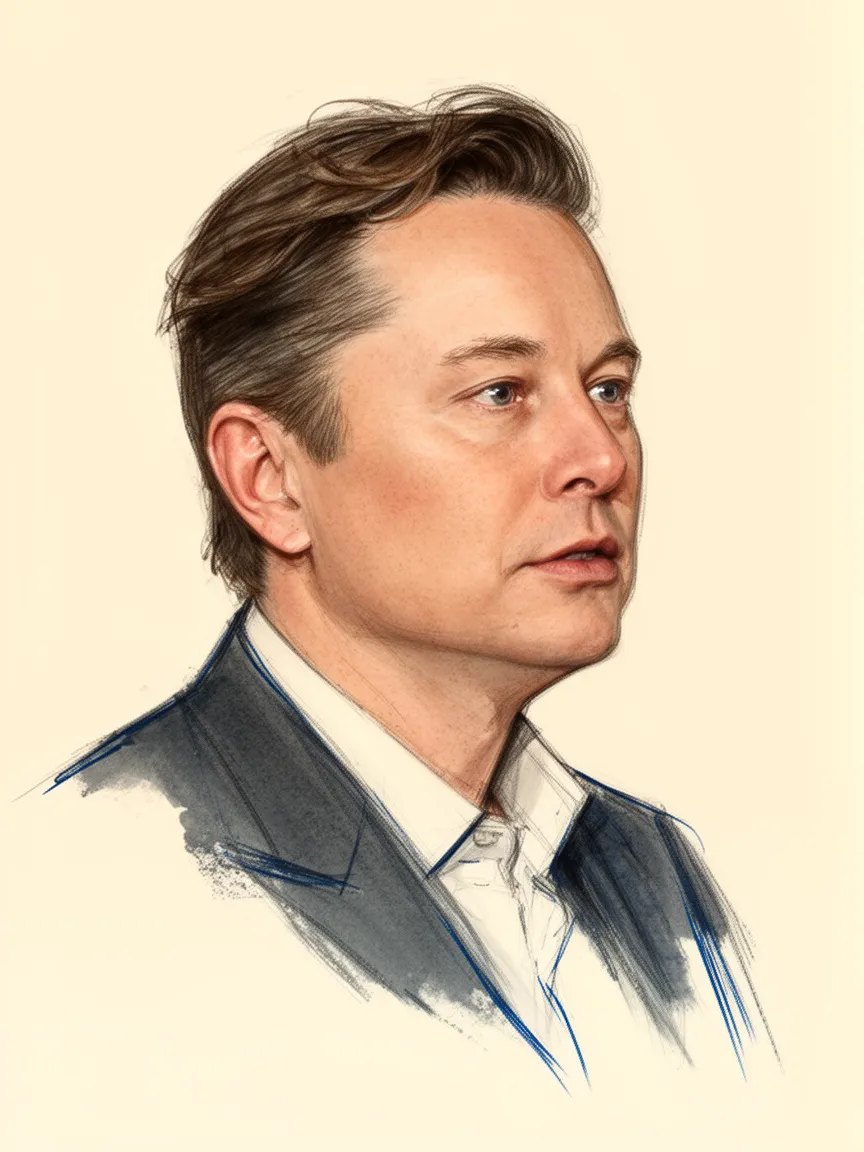

Elon Musk on Dangers of AI

Elon Musk has expressed deep concern about the potential dangers of artificial intelligence, viewing unaligned superintelligence as a risk comparable to or greater than nuclear weapons. He has repeatedly called for urgent regulation and governance of advanced AI development to mitigate catastrophic outcomes for humanity. He co-founded OpenAI with the initial mission of safe AGI development, later diverged from that path, and went on to create his own competing AI company, xAI.

Context

Musk's focus on AI dangers sits alongside his leadership of technology companies, including Tesla and SpaceX, his founding of xAI, and his earlier involvement in OpenAI. His neurotechnology work at Neuralink, which aims to integrate the human brain with AI, also engages him directly with advanced AI interfaces. This dual role as both a developer of frontier technology and a vocal critic underscores the seriousness with which he approaches AI safety.

Timeline

- Musk co-founded OpenAI as a non-profit organization dedicated to developing artificial general intelligence in a manner that is safe and beneficial to humanity.

- Musk left the OpenAI board, citing growing discontent with the organization's direction amidst the acceleration of machine learning advancements.

- Musk launched xAI, an artificial intelligence company, stating its aim is to develop a generative AI program that competes with current offerings like ChatGPT.

Actions Taken

- Founding Organisation: Co-founded OpenAI as a non-profit research company with the stated goal of ensuring artificial general intelligence is safe and beneficial.

- Funding: Pledged initial funding, reportedly around $50 million, to OpenAI shortly after its founding.

- Founding Organisation: Launched xAI to develop generative AI in competition with other major labs, following his departure from the OpenAI board.

Key Quotes

With artificial intelligence, we are summoning the demon.

AI is a far greater threat than nuclear weapons.

Criticism

Musk's alleged overuse of certain substances has been linked to concerns from close associates regarding his public behavior, which could impact his judgment on critical issues like AI.

Sources

Elon Musk co-founded OpenAI to keep an eye on Google

Elon Musk launched xAI to compete with OpenAI and Google

Elon Musk’s warnings about AI: 'Summoning the demon'

Musk on AI: 'Far greater threat than nuclear weapons'

Elon Musk: I have Asperger syndrome (updated autism)

* This is not an exhaustive list of sources.